Inverse Effect on Facial Recognition Algorithms

Link to Repo:


What is the Inverse Effect?

The inverse effect (Yin, 1969), also known as the face inversion effect, is the phenomenon that a face is more difficult to recognize when it is inverted than when it is upright. When this phenomenon was first reported in 1969, subjects were trained on inverted faces and then tested on previously unseen inverted images of the faces they had been trained on.

Computing, for each image, the difference between the probability a model assigns to the correct identity when the image is upright and when it is inverted makes it possible to test facial recognition models for the inverse effect.

Methods:

The VGGFace2 models were pretrained on 3,300,000 images of 8,631 identities, none of whom are Bill Gates, Jeff Bezos, or Elon Musk. There are three versions of the VGGFace2 model: ResNet50, SENet50, and VGG16. Since the VGG16 version showed the most promise in resembling the brain's ability to recognize faces, it was chosen as the model for the face embedding phase.

The first step in any facial recognition pipeline is face detection. For face detection I used a Multi-Task Cascaded Convolutional Network (MTCNN) on 15 distinct images of Jeff Bezos, Elon Musk, and Bill Gates. After the MTCNN model detected the faces, I flipped the images horizontally to produce their inverted versions. These images were then fed to the pretrained models to extract their facial embeddings.
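The crop-and-invert step can be sketched with NumPy. This is a minimal sketch that assumes the detector returns each face as an [x, y, width, height] bounding box (the format of the `box` field returned by `detect_faces` in the `mtcnn` Python package); `crop_face` and `invert_face` are hypothetical helpers. Because the inverse effect concerns upside-down faces, `invert_face` flips the image top to bottom with `np.flipud`; `np.fliplr` would instead produce the left-right mirror image.

```python
import numpy as np


def crop_face(pixels: np.ndarray, box) -> np.ndarray:
    """Crop one face from an H x W x C image using an [x, y, width, height] box.

    Detectors can return slightly negative coordinates for faces touching the
    image border, so the start of the crop is clamped to 0.
    """
    x, y, width, height = box
    return pixels[max(0, y):y + height, max(0, x):x + width]


def invert_face(face: np.ndarray) -> np.ndarray:
    """Return the upside-down version of a face by reversing its rows (top to bottom)."""
    return np.flipud(face)


# Usage on a tiny synthetic 4 x 4 RGB "image".
image = np.arange(4 * 4 * 3).reshape(4, 4, 3)
face = crop_face(image, [1, 1, 2, 2])
upside_down = invert_face(face)
```

Flipping the row axis keeps every pixel but turns the face upside down, which is the manipulation studied in inversion experiments; a left-right flip leaves the face upright.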

I used transfer learning on each model, extracting the facial embeddings from all the layers before the classification layer. The VGG16 version of the VGGFace2 model outputs a 512-element vector representing the facial features of the input image.

I normalized those embeddings and then trained a Support Vector Classifier (SVC) with a linear kernel and its random state set to true. For each tech giant, the training set contained the facial embeddings of 44 images: 22 upright images plus their 22 inverted versions, for 132 training images in total. The test set contained 5 unseen images of each tech giant plus their inverted versions, for 30 test images in total. Once the model was fitted to the training set, I classified the testing set and, for each test image, subtracted the model's predicted probability of the true class for the inverted version from the predicted probability of the true class for the upright version. If the difference is positive, the image induces the inverse effect. I then ran a one-sided, one-sample t-test on these 15 differences at a significance level of 0.05.
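The data layout described above can be sketched with NumPy. This sketch assumes each person's upright embeddings are stacked in an (n_images, 512) array with the matching inverted embeddings in a second array of the same shape, and it assumes "normalized" means L2 (unit-length) normalization; `l2_normalize` and `build_split` are hypothetical helpers.

```python
import numpy as np


def l2_normalize(embeddings: np.ndarray) -> np.ndarray:
    """Scale each row (one face embedding) to unit Euclidean length."""
    norms = np.linalg.norm(embeddings, axis=1, keepdims=True)
    return embeddings / np.maximum(norms, 1e-12)  # guard against all-zero rows


def build_split(upright: dict, inverted: dict):
    """Stack upright and inverted embeddings for every person into X, y, is_inverted.

    upright[name] and inverted[name] are (n_images, dim) arrays in the same
    order, so row i of inverted[name] is the inverted copy of row i of upright[name].
    """
    X, y, is_inverted = [], [], []
    for name in upright:
        for flag, block in ((False, upright[name]), (True, inverted[name])):
            X.append(block)
            y.extend([name] * len(block))
            is_inverted.extend([flag] * len(block))
    return l2_normalize(np.vstack(X)), np.array(y), np.array(is_inverted)


# Usage with random placeholder embeddings (not real face data).
rng = np.random.default_rng(0)
names = ["Elon Musk", "Jeff Bezos", "Bill Gates"]
train_up = {n: rng.normal(size=(22, 512)) for n in names}
train_inv = {n: rng.normal(size=(22, 512)) for n in names}
X_train, y_train, train_flags = build_split(train_up, train_inv)
```

The resulting `X_train` and `y_train` could then be passed to scikit-learn's `SVC(kernel="linear", probability=True)`. In scikit-learn, `probability=True` is what enables `predict_proba`, which returns the per-class probabilities that the inverse score is computed from.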

H0: μ = 0 (μ is the mean inverse score over the test images)

H1: μ > 0 (upper-tailed)

I will reject H0 at α=0.05 if t > 1.761.
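The test statistic and decision rule can be sketched with the Python standard library alone. The function names are hypothetical, and the critical value 1.761 is the one stated above, which applies to 14 degrees of freedom (15 inverse scores minus one).

```python
import math
import statistics

# One-sided critical value for alpha = 0.05 and 14 degrees of freedom.
# It is only valid for exactly 15 scores.
T_CRITICAL_DF14 = 1.761


def one_sample_t(scores, mu0=0.0):
    """Return the one-sample t statistic and its degrees of freedom.

    t = (mean - mu0) / (s / sqrt(n)), where s is the sample (n - 1) standard deviation.
    """
    n = len(scores)
    mean = statistics.mean(scores)
    s = statistics.stdev(scores)
    return (mean - mu0) / (s / math.sqrt(n)), n - 1


def reject_h0(scores, mu0=0.0):
    """Upper-tailed rule: reject H0 (mean == mu0) if t exceeds the critical value."""
    t, df = one_sample_t(scores, mu0)
    if df != 14:
        raise ValueError("T_CRITICAL_DF14 only applies to 15 scores (14 degrees of freedom)")
    return t > T_CRITICAL_DF14
```

Applied to the 15 inverse scores, `reject_h0(scores)` implements exactly the rejection rule above; for a different number of test images, the critical value would have to be looked up again.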

Face Detection Using an MTCNN Model on Upright Faces

Extract Face Embeddings from upright and inverted images using the VGG16 pretrained model

Support Vector Classifier fitted to the training set.

SVC predictions on the unseen testing set

Data:

I included 3 tech giants in the training and testing sets:

- Elon Musk (22 distinct images in the training set / 5 distinct images in the testing set)
  - I chose Elon Musk because he has no unusual facial features, does not wear glasses, and has an immense number of photos of himself online.

- Jeff Bezos (22 distinct images in the training set / 5 distinct images in the testing set)
  - I chose Jeff Bezos because he has ptosis (a drooping upper eyelid) on his right eye, and I wanted to test the model on someone whose face is not perfectly symmetrical.

- Bill Gates (22 distinct images in the training set / 5 distinct images in the testing set)
  - I chose Bill Gates because he wears glasses, and I wanted to test the model on faces with and without glasses.

Testing Set:

Results:

Two of the images in the testing set were misclassified as a different person. To calculate the inverse score, I used the model's predicted probability of the image belonging to its true class (not its predicted class). These are the images that the SVC model misclassified:

Predicted this was Jeff Bezos.

Predicted this was Elon Musk.

$$\text{Inverse Score} = P_{\text{SVC}}(\text{true class} \mid \text{upright image}) - P_{\text{SVC}}(\text{true class} \mid \text{inverted image})$$

where $P_{\text{SVC}}(\text{true class} \mid \cdot)$ is the SVC model's predicted probability of the image belonging to its true class.
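Given the class-probability matrices for the upright and inverted test images (for example, the output of `predict_proba` from a scikit-learn classifier, whose columns follow the order of its `classes_` attribute), the inverse score is a row-wise difference. This NumPy sketch assumes row i of both matrices comes from the same test image; `inverse_scores` is a hypothetical helper.

```python
import numpy as np


def inverse_scores(proba_upright, proba_inverted, true_labels, classes):
    """Inverse score per test image: P(true class | upright) - P(true class | inverted).

    proba_upright, proba_inverted: (n_images, n_classes) probability matrices.
    true_labels: the true identity of each image, one per row.
    classes: the identity that each column corresponds to, in column order.
    """
    proba_upright = np.asarray(proba_upright, dtype=float)
    proba_inverted = np.asarray(proba_inverted, dtype=float)
    column = {name: j for j, name in enumerate(classes)}
    cols = np.array([column[label] for label in true_labels])
    rows = np.arange(len(cols))
    # Use the probability of the TRUE class, even when the predicted class is wrong.
    return proba_upright[rows, cols] - proba_inverted[rows, cols]


# Usage with made-up probabilities for two images (illustration only).
classes = ["Bill Gates", "Elon Musk", "Jeff Bezos"]
upright = [[0.7, 0.2, 0.1], [0.1, 0.3, 0.6]]
inverted = [[0.5, 0.3, 0.2], [0.2, 0.4, 0.4]]
scores = inverse_scores(upright, inverted, ["Bill Gates", "Elon Musk"], classes)
```

A positive entry in `scores` means that image induces the inverse effect; the second made-up image shows how a misclassified image (Elon Musk predicted as Jeff Bezos) still gets a score from its true-class column.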

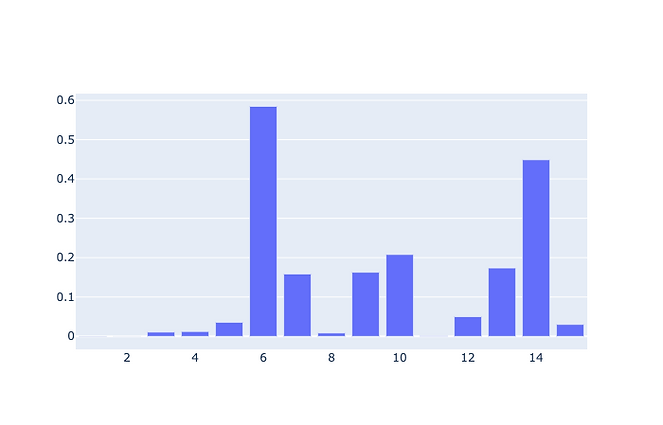

Only two of the 15 test images did not induce the inverse effect (their inverse scores were not positive). The mean inverse score was 0.125: on average, the model assigned a probability 0.125 higher to the true class for the upright image than for its inverted version. For a significance level of 0.05 and 14 degrees of freedom, the critical value for the one-sided t-test is 1.761. Since our test statistic (2.752) is greater than the critical value (1.761), we reject the null hypothesis and conclude that the mean inverse score is greater than 0, meaning that the classifier exhibits the inverse effect. The result is significant, with a one-sided p-value below 0.01.
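The one-sided p-value P(T > t) for the test statistic reported above can be approximated with only the standard library by integrating Student's t density with Simpson's rule. This is a minimal numerical sketch, not a replacement for a statistics library such as `scipy.stats.t.sf`; the function names are hypothetical.

```python
import math


def t_pdf(x: float, df: int) -> float:
    """Density of Student's t distribution with df degrees of freedom."""
    # lgamma avoids overflow in the gamma function for large df.
    log_coef = math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
    coef = math.exp(log_coef) / math.sqrt(df * math.pi)
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)


def t_upper_tail(t: float, df: int, steps: int = 10_000) -> float:
    """One-sided p-value P(T > t) for t >= 0, using Simpson's rule on [0, t].

    The density is symmetric about 0, so P(T > t) = 0.5 - integral of the pdf from 0 to t.
    """
    if t < 0:
        raise ValueError("this sketch only handles t >= 0")
    if steps % 2:
        steps += 1  # Simpson's rule needs an even number of intervals
    h = t / steps
    total = t_pdf(0.0, df) + t_pdf(t, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return 0.5 - total * h / 3
```

For example, `t_upper_tail(2.752, 14)` gives the one-sided p-value for the reported statistic, and `t_upper_tail(1.761, 14)` should come out very close to 0.05, which is a quick check on the critical value.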


Testing Images Inverse Score Bar Plot (axis label: Inverse Score)

Discussion:

The SVM classifier exhibits the inverse effect seen in humans. This is surprising given that the model was trained on the inverted versions of all of the images in the training set. The classification phase therefore shows evidence that the model recognizes faces in a manner similar to the human brain, at least with respect to sensitivity to inversion.

Future Direction:

One limitation of this study is that it only included Caucasian males; future research that adds people of other races and women to the testing set would give a better analysis of the model as a whole. Another possible future direction would be to compare other facial recognition models to see whether they also show evidence of the inverse effect in their classification phases.